Regression Techniques for Predictive Analysis#

Week 5 materials can be accessed here. Following our exploration of classification tasks in machine learning, such as distinguishing between sea ice and open water, we now shift our focus to regression techniques. Regression analysis is pivotal in predictive modeling, allowing us to estimate continuous outcomes. For example, this could include predicting lead fraction and melt pond fraction from optical satellite data. We will examine three distinct regression methods, each with its unique advantages and applicability to different types of data:

Polynomial Regression: An extension of linear regression that models the relationship between the response variable and polynomial features of the predictors. It is particularly useful when the relationship between variables is non-linear.

Neural Networks: These versatile and powerful models can capture complex patterns in data, making them suitable for a wide range of problems, including those with high-dimensional inputs such as multispectral or hyperspectral imagery.

Gaussian Processes: A probabilistic approach that provides not only predictions but also a measure of uncertainty, which can be crucial when dealing with sparse or noisy data, as is often the case in remote sensing.

By comparing these methods, we aim to understand their strengths and limitations in the context of geospatial analysis and find the best fit for our specific application in monitoring the polar regions.

Polynomial Regression [Draper and Smith, 1998]#

Introduction to Polynomial Regression#

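Polynomial regression is a form of regression analysis in which the relationship between the independent variable \(x\) and the dependent variable \(y\) is modeled as an \(n\)th-degree polynomial. Polynomial regression fits a nonlinear relationship between the value of \(x\) and the corresponding conditional mean of \(y\), denoted \(E(y \mid x)\). Although the fitted curve is nonlinear in \(x\), the model is linear in its coefficients, which is why it can be estimated with the same least-squares machinery as ordinary linear regression.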

Why Polynomial Regression?#

Polynomial regression can be used in situations where the relationship between the independent and dependent variables is nonlinear. It can model the curve in the data by adding higher degree terms of an independent variable, which is a straightforward way to model nonlinearity.
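As a minimal sketch of this point, the snippet below fits a straight line and a quadratic to the same curved, synthetic data with NumPy's `np.polyfit` and compares their mean squared errors. The data-generating curve, the noise level, and the helper name `fit_mse` are assumptions chosen purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: a quadratic trend plus Gaussian noise
x = np.linspace(0, 10, 200)
y = 3 - 2 * x + x**2 + rng.normal(scale=2.0, size=x.size)

def fit_mse(degree):
    """Fit a polynomial of the given degree by least squares; return its training MSE."""
    coeffs = np.polyfit(x, y, deg=degree)  # coefficients, highest power first
    residuals = y - np.polyval(coeffs, x)
    return float(np.mean(residuals**2))

mse_linear = fit_mse(1)
mse_quadratic = fit_mse(2)
print(f"degree 1 MSE: {mse_linear:.2f}")
print(f"degree 2 MSE: {mse_quadratic:.2f}")
```

On data like this, the straight line leaves a large systematic residual that the added \(x^2\) term removes.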

Key Components of Polynomial Regression#

Polynomial Terms: Higher-order terms (\(x^2, x^3, \ldots, x^n\)) are added to a linear regression model to capture the non-linear relationship between \(x\) and \(y\).

Degree of Polynomial: The degree \( n \) of the polynomial regression determines the flexibility of the model to fit the data. The higher the degree, the more flexible the model.

Model Fitting: The polynomial regression model is fitted using the method of least squares, which minimizes the sum of the squares of the differences between the observed and predicted values.
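The three components above can be sketched directly in NumPy: build the polynomial terms as columns of a design matrix, choose a degree, and solve the least-squares problem. This is an illustrative sketch rather than the implementation used later in these notes; the helper `polynomial_design_matrix` and the noise-free test data are assumptions.

```python
import numpy as np

def polynomial_design_matrix(x, degree):
    """Return the columns [1, x, x^2, ..., x^degree] for a 1-D array x."""
    return np.vander(x, N=degree + 1, increasing=True)

# Noise-free data from a known quadratic, so the fit should recover it
x = np.linspace(-2, 2, 25)
y = 3 - 2 * x + x**2

A = polynomial_design_matrix(x, degree=2)

# Least squares: minimize the sum of squared differences between y and A @ beta
beta, *_ = np.linalg.lstsq(A, y, rcond=None)
print(beta)  # coefficients ordered from the constant term upward
```

Because the data contain no noise, the recovered coefficients should match the generating values 3, -2, and 1 up to floating-point error.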

Understanding Polynomial Regression#

The model assumes that the relationship between the variables can be described by a polynomial of degree \(n\):

\[ y = \beta_0 + \beta_1 x + \beta_2 x^2 + \cdots + \beta_n x^n + \epsilon \]

where:

\(y\) is the response variable.

\(x\) is the predictor variable.

\(\beta_0, \beta_1, \ldots, \beta_n\) are the model coefficients.

\(\epsilon\) is the error term, capturing the deviation from the model.
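For reference, the same model can be written in matrix form, which makes the least-squares fit explicit. This is a sketch in standard notation; the number of observations \(N\), the design matrix \(X\), and the estimate \(\hat{\boldsymbol{\beta}}\) are symbols introduced here for illustration.

```latex
\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon},
\qquad
X =
\begin{pmatrix}
1 & x_1 & x_1^2 & \cdots & x_1^n \\
1 & x_2 & x_2^2 & \cdots & x_2^n \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
1 & x_N & x_N^2 & \cdots & x_N^n
\end{pmatrix},
\qquad
\hat{\boldsymbol{\beta}}
= \arg\min_{\boldsymbol{\beta}} \lVert \mathbf{y} - X\boldsymbol{\beta} \rVert_2^2
= (X^\top X)^{-1} X^\top \mathbf{y}
```

The closed-form expression holds when \(X^\top X\) is invertible, which requires at least \(n + 1\) distinct values of \(x\).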

Advantages of Polynomial Regression#

Flexibility: Can fit a wide range of curvatures in the data.

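Interpretability: The model remains linear in its coefficients, so it is easier to inspect than most black-box models. Note, however, that an individual coefficient cannot be read as the change in \(y\) for a unit change in \(x\) with the other terms held constant, because \(x, x^2, \ldots, x^n\) all change together; the rate of change of the fitted curve depends on the value of \(x\).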

Basic Code Implementation#

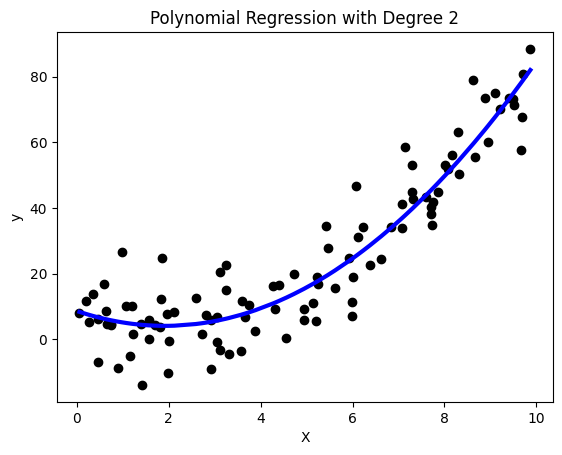

Below is a simple implementation of polynomial regression using scikit-learn’s PolynomialFeatures and LinearRegression classes. This illustrates how to fit a polynomial regression model to a dataset.

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.linear_model import LinearRegression
    from sklearn.preprocessing import PolynomialFeatures

    # Generate synthetic data: a quadratic trend plus Gaussian noise
    np.random.seed(42)
    X = np.random.rand(100, 1) * 10  # Predictor variable
    y = 3 - 2 * X + X ** 2 + np.random.randn(100, 1) * 10  # Response variable

    # Transform the data to include polynomial terms
    polynomial_features = PolynomialFeatures(degree=2)
    X_poly = polynomial_features.fit_transform(X)

    # Fit the polynomial regression model (linear regression on the polynomial terms)
    model = LinearRegression()
    model.fit(X_poly, y)
    y_pred = model.predict(X_poly)

    # Sort by X so the fitted curve is drawn from left to right
    X_sorted, y_pred_sorted = zip(*sorted(zip(X.flatten(), y_pred.flatten())))

    # Plot the results
    plt.scatter(X, y, color='black')  # Scatter plot of data points
    plt.plot(X_sorted, y_pred_sorted, color='blue', linewidth=3)  # Fitted regression curve
    plt.title('Polynomial Regression with Degree 2')
    plt.xlabel('X')
    plt.ylabel('y')
    plt.show()
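The example above evaluates the model on the same points it was fitted to, which says little about how the choice of degree affects predictions on new data. One possible way to examine this, sketched below with scikit-learn, is to hold out part of the data and compare training and test error across several degrees. The degrees tried, the 70/30 split, and the sample size are assumptions made for illustration only.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data of the same form as above (quadratic trend plus noise)
rng = np.random.default_rng(42)
X = rng.uniform(0, 10, size=(200, 1))
y = (3 - 2 * X + X**2).ravel() + rng.normal(scale=10, size=200)

# Hold out 30% of the points to estimate error on unseen data
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

results = {}
for degree in (1, 2, 5):
    model = make_pipeline(PolynomialFeatures(degree=degree), LinearRegression())
    model.fit(X_train, y_train)
    train_mse = mean_squared_error(y_train, model.predict(X_train))
    test_mse = mean_squared_error(y_test, model.predict(X_test))
    results[degree] = (train_mse, test_mse)
    print(f"degree={degree}: train MSE={train_mse:.1f}, test MSE={test_mse:.1f}")
```

Training error can only decrease as the degree grows, so the held-out error is the more informative guide when choosing the degree.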

Neural Networks [Goodfellow et al., 2016]#

Introduction to Neural Networks#

Neural networks are a family of models, loosely inspired by the human brain, that are designed to recognize patterns. They can be used to label or cluster raw input, a kind of machine perception. The patterns they recognize are numerical and contained in vectors, so all real-world data, whether images, sound, text, or time series, must first be translated into numerical form.

Why Neural Networks for Regression and Classification?#

Neural networks are particularly effective for:

Handling High Dimensionality: They can manage data with high dimensionality (like images) and extract patterns or features.

Flexibility: Neural networks can be applied to a wide range of tasks, including both regression and classification.

Key Components of Neural Networks#

Layers: Composed of neurons, layers are the fundamental units of neural networks. A fully connected network consists of input, hidden, and output layers.

Neurons: In a fully connected network, each neuron receives the outputs of all neurons in the previous layer, computes a weighted sum plus a bias, applies an activation function, and passes the result to every neuron in the next layer.

Weights and Biases: These parameters are adjusted during training to minimize the network’s error in predicting the target variable.

Activation Functions: Functions like ReLU or Sigmoid introduce non-linearities, allowing the network to model complex relationships.
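To make these components concrete, here is a minimal NumPy sketch of a forward pass through two dense layers: a weighted sum plus bias, followed by a ReLU activation in the hidden layer. The layer sizes, the random weights, and the helper names `relu` and `dense` are assumptions for illustration; in practice the weights would be learned during training.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: element-wise max(z, 0)."""
    return np.maximum(z, 0.0)

def dense(inputs, weights, bias, activation=None):
    """One fully connected layer: weighted sum plus bias, then optional activation."""
    z = inputs @ weights + bias
    return activation(z) if activation is not None else z

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))        # 5 samples with 3 features each

# Randomly initialized parameters (untrained, for illustration only)
W1, b1 = rng.normal(size=(3, 4)), np.zeros(4)   # hidden layer with 4 neurons
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)   # output layer with 1 neuron

hidden = dense(x, W1, b1, activation=relu)  # non-linear hidden representation
output = dense(hidden, W2, b2)              # linear output, as used for regression
print(hidden.shape, output.shape)
```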

Understanding Fully Connected Neural Networks#

Fully connected neural networks consist of dense layers where each neuron in one layer is connected to all neurons in the next layer. The depth (number of layers) and width (number of neurons per layer) can be adjusted to increase the network’s capacity.
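One way to see how depth and width affect capacity is to count trainable parameters. In a dense layer, every input connects to every output, so a layer with `n_in` inputs and `n_out` neurons has `n_in * n_out` weights plus `n_out` biases. The sketch below applies this to a hypothetical network with one input, two hidden layers of 64 neurons, and one output; the function name and the layer sizes are illustrative assumptions.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out  # weights plus biases for this layer
    return total

n_params = count_dense_parameters([1, 64, 64, 1])
print(n_params)  # 128 + 4160 + 65 = 4353
```

Doubling the width of both hidden layers roughly quadruples the count, because the hidden-to-hidden weight matrix dominates.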

Advantages of Neural Networks#

Adaptability: They can model complex non-linear relationships.

Scalability: Effective for large datasets and high-dimensional data.
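As a rough illustration of how adjusting weights and biases lets a network model a non-linear relationship, the sketch below trains a one-hidden-layer network with plain gradient descent in NumPy. It is a toy example under stated assumptions (the target function \(y = x^2\), 16 tanh units, the learning rate, and the number of steps are all arbitrary choices), not the training procedure used by TensorFlow or Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy problem: learn y = x^2 on [-1, 1]
X = rng.uniform(-1.0, 1.0, size=(200, 1))
y = X ** 2

# One hidden layer with 16 tanh units (sizes are arbitrary choices)
W1 = rng.normal(scale=0.5, size=(1, 16))
b1 = np.zeros(16)
W2 = rng.normal(scale=0.5, size=(16, 1))
b2 = np.zeros(1)

learning_rate = 0.1
losses = []
for step in range(500):
    # Forward pass
    hidden = np.tanh(X @ W1 + b1)
    pred = hidden @ W2 + b2
    error = pred - y
    losses.append(float(np.mean(error ** 2)))

    # Backward pass: gradients of the mean squared error
    grad_pred = 2.0 * error / len(X)
    grad_W2 = hidden.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_hidden = grad_pred @ W2.T
    grad_z = grad_hidden * (1.0 - hidden ** 2)  # derivative of tanh
    grad_W1 = X.T @ grad_z
    grad_b1 = grad_z.sum(axis=0)

    # Gradient descent update of weights and biases
    W1 -= learning_rate * grad_W1
    b1 -= learning_rate * grad_b1
    W2 -= learning_rate * grad_W2
    b2 -= learning_rate * grad_b2

print(f"initial loss: {losses[0]:.4f}, final loss: {losses[-1]:.4f}")
```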

Basic Code Implementation#

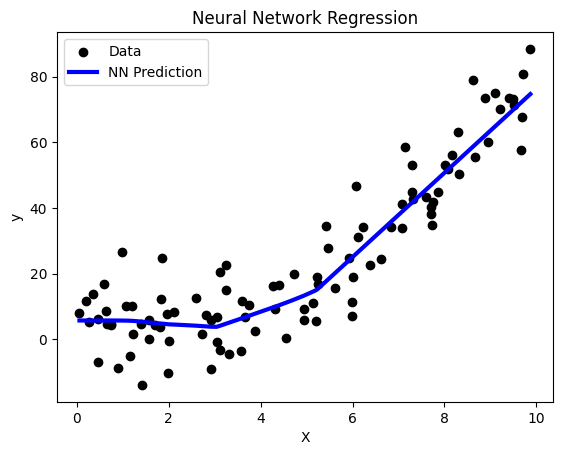

Below is a basic example of a neural network implemented with TensorFlow and Keras. It fits a small fully connected network to a regression problem; a classification network would differ mainly in its output layer and loss function.

    import numpy as np
    import matplotlib.pyplot as plt
    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense

    # Generate synthetic data: a quadratic trend plus Gaussian noise
    # (this seeds NumPy only; Keras weight initialization remains random)
    np.random.seed(42)
    X = np.random.rand(100, 1) * 10
    y = 3 - 2 * X + X ** 2 + np.random.randn(100, 1) * 10

    # Fully connected network: two hidden layers and a linear output
    model = Sequential([
        Dense(64, activation='relu', input_shape=(1,)),  # First hidden layer (receives the 1-D input)
        Dense(64, activation='relu'),  # Second hidden layer
        Dense(1)  # Output layer (linear, for regression)
    ])

    model.compile(optimizer='adam', loss='mean_squared_error')
    model.fit(X, y, epochs=500)  # Train for more epochs for better fitting
    y_pred = model.predict(X)

    # Sort by X so the prediction curve is drawn from left to right
    X_sorted, y_pred_sorted = zip(*sorted(zip(X.flatten(), y_pred.flatten())))

    plt.scatter(X, y, color='black', label='Data')  # Original data points
    plt.plot(X_sorted, y_pred_sorted, color='blue', linewidth=3, label='NN Prediction')  # NN prediction curve
    plt.title('Neural Network Regression')
    plt.xlabel('X')
    plt.ylabel('y')
    plt.legend()
    plt.show()

    # Model summary
    model.summary()

Epoch 1/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 2s 99ms/step - loss: 1348.2933

Epoch 2/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1125.9036

Epoch 3/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1147.6815

...

Epoch 100/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 177.1145

...

Epoch 200/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 130.6619

...

Epoch 300/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 98.2945

...

Epoch 376/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 86.7767

Epoch 377/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 81.4394

Epoch 378/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 91.9835

Epoch 379/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 84.3892

Epoch 380/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 76.8661

Epoch 381/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 83.0296

Epoch 382/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 82.6744

Epoch 383/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 69.4027

Epoch 384/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 76.6991

Epoch 385/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 87.6670

Epoch 386/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 77.6589

Epoch 387/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 82.4870

Epoch 388/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 80.1093

Epoch 389/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 82.5548

Epoch 390/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 16ms/step - loss: 76.8090

Epoch 391/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 81.4444

Epoch 392/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 78.4127

Epoch 393/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 83.3394

Epoch 394/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 82.7117

Epoch 395/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 80.6367

Epoch 396/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 92.0327

Epoch 397/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 80.2188

Epoch 398/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 81.7899

Epoch 399/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 77.5997

Epoch 400/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 78.2855

Epoch 401/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 81.8096

Epoch 402/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 79.9805

Epoch 403/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 83.7968

Epoch 404/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 77.7279

Epoch 405/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 80.6422

Epoch 406/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 77.8255

Epoch 407/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 85.9207

Epoch 408/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 80.2450

Epoch 409/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 80.2823

Epoch 410/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 72.8231

Epoch 411/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 74.1868

Epoch 412/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 83.1507

Epoch 413/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 77.2519

Epoch 414/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 74.8994

Epoch 415/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 76.6379

Epoch 416/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 81.5947

Epoch 417/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 81.2921

Epoch 418/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 75.7814

Epoch 419/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 83.4091

Epoch 420/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 83.2368

Epoch 421/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 79.6807

Epoch 422/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 79.1929

Epoch 423/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 78.8397

Epoch 424/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 84.9124

Epoch 425/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 80.5572

Epoch 426/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 87.7752

Epoch 427/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 83.0531

Epoch 428/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 77.5774

Epoch 429/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 80.3310

Epoch 430/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 77.2449

Epoch 431/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 84.0443

Epoch 432/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 82.0993

Epoch 433/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 76.7066

Epoch 434/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 89.0438

Epoch 435/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 78.5876

Epoch 436/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 85.5911

Epoch 437/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 78.2316

Epoch 438/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 70.1622

Epoch 439/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 68.7558

Epoch 440/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 83.5849

Epoch 441/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 74.2438

Epoch 442/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 79.1622

Epoch 443/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 83.6416

Epoch 444/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 74.5388

Epoch 445/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 82.8456

Epoch 446/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 75.9340

Epoch 447/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 72.5901

Epoch 448/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 76.5318

Epoch 449/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 77.6360

Epoch 450/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 73.6370

Epoch 451/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 80.1876

Epoch 452/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 79.4755

Epoch 453/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 78.0991

Epoch 454/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 75.7690

Epoch 455/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 73.0166

Epoch 456/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 73.3771

Epoch 457/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 73.8156

Epoch 458/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 76.8916

Epoch 459/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 76.7707

Epoch 460/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 76.9650

Epoch 461/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 77.4121

Epoch 462/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 81.8295

Epoch 463/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 78.0754

Epoch 464/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 97.1548

Epoch 465/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 73.0095

Epoch 466/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 81.7165

Epoch 467/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 78.5228

Epoch 468/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 78.5047

Epoch 469/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 75.0725

Epoch 470/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 82.5374

Epoch 471/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 79.4458

Epoch 472/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 82.8779

Epoch 473/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 79.1691

Epoch 474/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 75.9858

Epoch 475/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 74.5119

Epoch 476/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 73.9745

Epoch 477/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 85.9955

Epoch 478/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 73.4420

Epoch 479/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 81.8375

Epoch 480/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 78.7761

Epoch 481/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 77.0681

Epoch 482/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 82.3831

Epoch 483/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 72.9930

Epoch 484/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 78.4397

Epoch 485/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 83.6012

Epoch 486/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 84.5314

Epoch 487/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 83.4128

Epoch 488/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 83.6444

Epoch 489/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 77.3585

Epoch 490/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 82.4787

Epoch 491/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 75.7676

Epoch 492/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 78.1167

Epoch 493/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 71.0695

Epoch 494/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 86.8403

Epoch 495/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 85.6359

Epoch 496/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 85.5344

Epoch 497/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 82.1130

Epoch 498/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 79.9643

Epoch 499/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 81.3805

Epoch 500/500

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 84.6995

4/4 ━━━━━━━━━━━━━━━━━━━━ 0s 40ms/step

Model: "sequential_4"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┓
┃ Layer (type)                         ┃ Output Shape                ┃         Param # ┃
┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━┩
│ dense_12 (Dense)                     │ (None, 64)                  │             128 │
├──────────────────────────────────────┼─────────────────────────────┼─────────────────┤
│ dense_13 (Dense)                     │ (None, 64)                  │           4,160 │
├──────────────────────────────────────┼─────────────────────────────┼─────────────────┤
│ dense_14 (Dense)                     │ (None, 1)                   │              65 │
└──────────────────────────────────────┴─────────────────────────────┴─────────────────┘

Total params: 13,061 (51.02 KB)

Trainable params: 4,353 (17.00 KB)

Non-trainable params: 0 (0.00 B)

Optimizer params: 8,708 (34.02 KB)
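The parameter counts in this summary can be checked by hand: a fully connected layer with `n_in` inputs and `n_out` units has `(n_in + 1) * n_out` parameters (weights plus one bias per unit). The sketch below is a minimal check under the assumption that the network takes a single input feature, which is inferred from the 128 parameters of the first layer; the helper name `dense_params` is hypothetical.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per output unit."""
    return (n_in + 1) * n_out

# Layer widths read off the summary; the input width of 1 is an assumption.
layer_sizes = [1, 64, 64, 1]
counts = [dense_params(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
trainable = sum(counts)

print(counts)     # per-layer parameter counts
print(trainable)  # total trainable parameters
```

The per-layer values match the table (128, 4,160, 65) and sum to the 4,353 trainable parameters reported. The 8,708 optimizer parameters would be consistent with an optimizer that stores two state values per weight plus two scalar variables (2 × 4,353 + 2), as Adam does in recent Keras versions, although the optimizer itself is not visible in this output.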

Gaussian Processes [Bishop and Nasrabadi, 2006]#

Mathematical Framework#

Basic Concepts#

A Gaussian Process (GP) extends the Gaussian (or normal) distribution from ordinary random variables to functions: instead of describing a distribution over numbers or vectors, it describes a distribution over functions. In practice, a GP is a method to predict or estimate a function based on known data points.

In mathematical terms, a GP is defined through the values a function takes: for any finite selection of points from a set \(X\), the values that a function \(f\) takes at these points follow a joint Gaussian distribution.

The key to understanding GPs lies in two main concepts:

Mean Function: \(m: X \rightarrow Y\). This function gives the average expected value of the function \(f(x)\) at each point \(x\) in the set \(X\). It’s like predicting the average outcome based on the known data.

Kernel or Covariance Function: \(k: X \times X \rightarrow Y\). This function tells us how much two points in the set \(X\) are related or how they influence each other. It’s a way of understanding the relationship or similarity between different points in our data.

To apply GPs in a practical setting, we typically select several points in our input space \(X\), calculate the mean and covariance at these points, and then use this information to make predictions. This process involves working with the vectors and matrices derived from the mean and kernel functions, which together specify the Gaussian Process at the selected points.

Note: In mathematical notation, for a set of points \( \mathbf{X}=\{x_1, \ldots, x_N\} \), the mean vector \( \mathbf{m} \) and covariance matrix \( \mathbf{K} \) are constructed from these points using the mean and kernel functions: \( m_i = m(x_i) \) and \( K_{ij} = k(x_i, x_j) \), so \( \mathbf{m} \) has \(N\) entries and \( \mathbf{K} \) is an \(N \times N\) matrix.
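As a small illustration of this construction, the sketch below builds the mean vector and covariance matrix for a handful of points and draws a few functions from the resulting Gaussian. It assumes a zero mean function and the RBF kernel described in the next section; the function names, the six input points and the jitter value are illustrative choices, not part of the course code.

```python
import numpy as np

def mean_fn(x):
    """Zero mean function m(x) = 0 (an assumed, common default)."""
    return np.zeros(x.shape[0])

def rbf_kernel(a, b, lengthscale=1.0):
    """RBF covariance exp(-|x - x'|^2 / (2 l^2)) for 1-D inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return np.exp(-0.5 * sq_dist / lengthscale**2)

x_points = np.linspace(0.0, 5.0, 6)   # N = 6 input points
m = mean_fn(x_points)                  # mean vector, shape (N,)
K = rbf_kernel(x_points, x_points)     # covariance matrix, shape (N, N)

# Draw three functions from the GP prior, evaluated at x_points.
rng = np.random.default_rng(0)
jitter = 1e-9 * np.eye(len(x_points))  # small diagonal term for numerical stability
samples = rng.multivariate_normal(m, K + jitter, size=3)

print(m.shape, K.shape, samples.shape)
```

Each row of `samples` is one function drawn from the prior; nearby points receive similar values because their covariance in `K` is close to 1.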

Covariance Functions (Kernels)#

Covariance functions, or kernels, determine how a Gaussian Process (GP) generalizes from observed data. They are fundamental in defining the GP’s behavior.

Concept and Mathematical Representation:

Kernels measure the similarity between points in input space. The function \(k(x, x')\) computes the covariance between the outputs corresponding to inputs \(x\) and \(x'\).

For example, the Radial Basis Function (RBF) kernel is defined as \(k(x, x') = \exp\left(-\frac{1}{2l^2} \| x - x' \|^2\right)\), where \(l\) is the length-scale parameter.

Types of Kernels and Their Uses:

RBF Kernel: Suited for smooth functions. The length-scale \(l\) controls how rapidly the correlation decreases with distance.

Linear Kernel: \(k(x, x') = x^T x'\), useful for linear relationships.

Periodic Kernels: Capture periodic behavior, expressed as \(k(x, x') = \exp\left(-\frac{2\sin^2(\pi|x - x'|/p)}{l^2}\right)\), where \(p\) is the period and \(l\) is the length-scale.

In our context, the RBF Kernel will be used in most cases. More practical examples are in future chapters.
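The three kernels listed above can be written as short functions to see how each one scores the similarity of two inputs. This is a minimal sketch for scalar inputs; the function names are hypothetical, and the `period` argument of the periodic kernel is an assumption (with `period=1.0` the expression is \(\exp(-2\sin^2(\pi|x - x'|)/l^2)\)).

```python
import numpy as np

def rbf(x, x2, lengthscale=1.0):
    """Smooth kernel: similarity decays with squared distance."""
    return np.exp(-0.5 * (x - x2) ** 2 / lengthscale**2)

def linear(x, x2):
    """Linear kernel: the product x * x' for scalar inputs."""
    return x * x2

def periodic(x, x2, lengthscale=1.0, period=1.0):
    """Periodic kernel: inputs one full period apart are maximally similar."""
    return np.exp(-2.0 * np.sin(np.pi * np.abs(x - x2) / period) ** 2 / lengthscale**2)

# Compare how similarity changes with the distance between two inputs.
for d in (0.0, 0.5, 1.0):
    print(d, rbf(0.0, d), periodic(0.0, d))
```

At a distance of one full period the periodic kernel returns to 1, while the RBF kernel keeps decaying; this difference is what lets a periodic kernel carry information across repeated cycles.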

Hyperparameter Tuning:

Hyperparameters like \(l\) in RBF or periodicity in periodic kernels crucially affect GP modeling. Their tuning, often through methods like maximum likelihood, adapts the GP to the specific data structure.
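To make the idea of maximum-likelihood tuning concrete, the sketch below scores a few candidate length-scales by the log marginal likelihood \(\log p(\mathbf{y} \mid X) = -\tfrac{1}{2}\mathbf{y}^T \mathbf{K}_y^{-1}\mathbf{y} - \tfrac{1}{2}\log|\mathbf{K}_y| - \tfrac{N}{2}\log 2\pi\), with \(\mathbf{K}_y = \mathbf{K} + \sigma_n^2 I\), and keeps the best one. The toy data, the fixed noise variance and the candidate grid are assumptions made for illustration; libraries such as GPy use gradient-based optimisation rather than a grid.

```python
import numpy as np

def rbf_matrix(a, b, lengthscale):
    """RBF covariance matrix between two sets of 1-D inputs."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / lengthscale**2)

def log_marginal_likelihood(x, y, lengthscale, noise_var):
    """Log p(y | X) for a zero-mean GP with an RBF kernel."""
    n = len(x)
    K_y = rbf_matrix(x, x, lengthscale) + noise_var * np.eye(n)
    L = np.linalg.cholesky(K_y)                      # K_y = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    data_fit = -0.5 * y @ alpha
    complexity = -np.sum(np.log(np.diag(L)))         # equals -0.5 * log|K_y|
    return data_fit + complexity - 0.5 * n * np.log(2 * np.pi)

# Toy data (assumed for illustration): a noisy sine curve.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 40)
y = np.sin(x) + 0.1 * rng.standard_normal(40)

candidates = np.array([0.1, 0.3, 1.0, 3.0, 10.0])
scores = [log_marginal_likelihood(x, y, l, noise_var=0.01) for l in candidates]
best = candidates[int(np.argmax(scores))]
print(dict(zip(candidates.tolist(), np.round(scores, 1).tolist())), best)
```

Very short length-scales are penalised by the complexity term and very long ones by the data-fit term, so the likelihood tends to favour a length-scale comparable to the scale on which the data actually vary.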

Choosing the Right Kernel:

Choosing a kernel involves understanding the characteristics of the data. RBF is a default choice for many problems, but specific data patterns might necessitate different or combined kernels.

Mean and Variance#

The mean and variance functions in a Gaussian Process (GP) provide predictions and their uncertainties.

Mean Function - Mathematical Explanation:

The mean function, often denoted as \(m(x)\), gives the expected value of the function at each point. A common assumption is \(m(x) = 0\), although non-zero means can incorporate prior trends.

Variance Function - Quantifying Uncertainty:

The variance, denoted as \(\sigma^2(x)\), represents the uncertainty in predictions. It is calculated as \(\sigma^2(x) = k(x, x) - K(X, x)^T[K(X, X) + \sigma^2_nI]^{-1}K(X, x)\), where \(K(X, x)\) is the vector of covariances between the training inputs \(X\) and the test point \(x\), \(K(X, X)\) is the covariance matrix of the training inputs, and \(\sigma^2_n\) is the observation-noise variance.

Practical Interpretation:

High variance at a point suggests low confidence in predictions there, guiding decisions on where more data might be needed or caution in using the predictions.

Mean and Variance in Predictions:

Together, they provide a probabilistic forecast. The mean offers the best guess, while the variance indicates reliability. This duo is key in risk-sensitive applications.
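The variance formula above, together with the standard predictive mean for a zero-mean prior, \(\mu(x) = K(X, x)^T[K(X, X) + \sigma^2_nI]^{-1}\mathbf{y}\), can be evaluated directly with NumPy. The sketch below is a minimal illustration under assumed settings (RBF kernel with unit variance, three training points, noise variance 0.01); it is not the GPy implementation used later.

```python
import numpy as np

def rbf_matrix(a, b, lengthscale=1.0):
    """RBF covariance matrix between two sets of 1-D inputs."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / lengthscale**2)

def gp_posterior(x_train, y_train, x_test, lengthscale=1.0, noise_var=0.01):
    """Predictive mean and variance of a zero-mean GP at x_test."""
    K_y = rbf_matrix(x_train, x_train, lengthscale) + noise_var * np.eye(len(x_train))
    K_s = rbf_matrix(x_train, x_test, lengthscale)   # K(X, x), one column per test point
    k_ss = np.ones(len(x_test))                       # k(x, x) = 1 for this RBF kernel
    mean = K_s.T @ np.linalg.solve(K_y, y_train)
    var = k_ss - np.sum(K_s * np.linalg.solve(K_y, K_s), axis=0)
    return mean, var

x_train = np.array([1.0, 2.0, 3.0])
y_train = np.sin(x_train)
x_test = np.array([2.0, 8.0])   # one point inside the data, one far away

mean, var = gp_posterior(x_train, y_train, x_test)
print(mean, var)
```

At the test point inside the training data the variance is small and the mean is close to the observed value; far from the data the mean falls back to the prior mean of zero and the variance returns to the prior variance of one.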

Gaussian Process - A Logical Processing Chain#

Just like other machine learning algorithms, the logical processing chain for a Gaussian Process (GP) involves these key steps:

Defining the Problem:

Start by identifying the problem to be solved using GP, such as regression, classification, or another task where predicting a continuous function is required.

Data Preparation:

Organise the data into a suitable format. This includes input features and corresponding target values.

Choosing a Kernel Function:

Select an appropriate kernel (covariance function) for the GP. The choice depends on the nature of the data and the problem.

Setting the Hyperparameters:

Initialise hyperparameters for the chosen kernel. These can include parameters like length-scale in the RBF kernel or periodicity in a periodic kernel.

Model Training:

Train the GP model by optimizing the hyperparameters. This usually involves maximizing the likelihood of the observed data under the GP model.

Prediction:

Use the trained GP model to make predictions. This involves computing the mean and variance of the GP’s posterior distribution.

Model Evaluation:

Evaluate the model’s performance using suitable metrics. For regression, this could be RMSE or MAE; for classification, accuracy or AUC.

Refinement:

Based on the evaluation, refine the model by adjusting hyperparameters or kernel choice, and retrain if necessary.

This chain provides a comprehensive overview of the steps involved in applying Gaussian Processes to a problem, from initial setup to prediction and evaluation.
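The evaluation step of the chain can be sketched with a few lines of NumPy. The example below computes RMSE and MAE, plus one possible extra check that is specific to probabilistic models: the fraction of targets that fall inside the predicted 95% interval. The arrays are made-up illustrative values and the function names are hypothetical.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_true - y_pred)))

def coverage_95(y_true, y_pred, sigma):
    """Fraction of targets inside the band y_pred +/- 1.96 * sigma."""
    inside = np.abs(y_true - y_pred) <= 1.96 * sigma
    return float(np.mean(inside))

# Illustrative values only, not results from the course data.
y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred = np.array([1.5, 2.0, 2.0, 4.5])
sigma = np.array([0.5, 0.5, 0.4, 0.1])

print(rmse(y_true, y_pred), mae(y_true, y_pred), coverage_95(y_true, y_pred, sigma))
```

For a well-calibrated model the coverage on held-out data should be close to 0.95; a much lower value suggests the predicted uncertainty is too narrow, which can motivate revisiting the kernel or noise settings in the refinement step.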

Practical Examples#

You’ve now covered the essential concepts of Gaussian Processes. Next, let’s dive into a practical application by exploring a toy example of GP implementation in Python.

pip install GPy

Collecting GPy

Downloading GPy-1.13.2-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (2.3 kB)

Requirement already satisfied: numpy<2.0.0,>=1.7 in /usr/local/lib/python3.11/dist-packages (from GPy) (1.26.4)

Requirement already satisfied: six in /usr/local/lib/python3.11/dist-packages (from GPy) (1.17.0)

Collecting paramz>=0.9.6 (from GPy)

Downloading paramz-0.9.6-py3-none-any.whl.metadata (1.4 kB)

Requirement already satisfied: cython>=0.29 in /usr/local/lib/python3.11/dist-packages (from GPy) (3.0.11)

Collecting scipy<=1.12.0,>=1.3.0 (from GPy)

Downloading scipy-1.12.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (60 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 60.4/60.4 kB 4.2 MB/s eta 0:00:00

Requirement already satisfied: decorator>=4.0.10 in /usr/local/lib/python3.11/dist-packages (from paramz>=0.9.6->GPy) (4.4.2)

Downloading GPy-1.13.2-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.8 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.8/3.8 MB 15.2 MB/s eta 0:00:00

Downloading paramz-0.9.6-py3-none-any.whl (103 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 103.2/103.2 kB 10.1 MB/s eta 0:00:00

Downloading scipy-1.12.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (38.4 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 38.4/38.4 MB 21.0 MB/s eta 0:00:00

Installing collected packages: scipy, paramz, GPy

Attempting uninstall: scipy

Found existing installation: scipy 1.13.1

Uninstalling scipy-1.13.1:

Successfully uninstalled scipy-1.13.1

Successfully installed GPy-1.13.2 paramz-0.9.6 scipy-1.12.0

import numpy as np

import matplotlib.pyplot as plt

import GPy

np.random.seed(42)

X = np.random.rand(100, 1) * 10 # Predictor variable

y = 3 - 2 * X + X ** 2 + np.random.randn(100, 1) * 10 # Quadratic trend plus Gaussian noise (standard deviation 10)

kernel = GPy.kern.RBF(input_dim=1, variance=1., lengthscale=10.) # RBF kernel with initial hyperparameter values

gp = GPy.models.GPRegression(X, y.reshape(-1, 1), kernel)

gp.optimize(messages=True) # Tune kernel and noise hyperparameters by maximising the likelihood

X_pred = np.linspace(0, 10, 1000).reshape(-1, 1)

y_pred, variance = gp.predict(X_pred) # Predictive mean and variance at X_pred

sigma = np.sqrt(variance)

plt.figure()

plt.plot(X, y, 'r.', markersize=10, label='Observations')

plt.plot(X_pred, y_pred, 'b-', label='Prediction')

plt.fill_between(X_pred.ravel(), (y_pred - 1.96*sigma).flatten(), (y_pred + 1.96*sigma).flatten(), alpha=0.2, color='blue', label='95% confidence interval')

plt.title('Gaussian Process Regression with GPy')

plt.legend()

plt.show()

The processing chain in this script:

A synthetic dataset is generated, consisting of 100 points along the quadratic curve \(y = 3 - 2x + x^2\) with added Gaussian noise (standard deviation 10).

A Gaussian Process model with a Radial Basis Function (RBF) kernel is defined and fit to the data.

The model is used to predict values over a range, and the standard deviation (sigma) of the predictions is calculated.

The predictions, along with the 95% confidence intervals (calculated as 1.96 times the standard deviation), are plotted.